Sociocybernetic understandings of consciousness

Bernard Scott

International Center for Sociocybernetic Studies

Bernard.Scott@sociocybernetics.eu

RC51, Colombia, 2017

Personal page:

http://peacefromharmony.org/?cat=en_c&key=255

Abstract

The aim of this paper is to show how sociocybernetics can usefully combine biological, psychological and sociological concepts to provide conceptual clarification and insightful understandings of human consciousness. Following a brief discussion and critique of how the term “consciousness” is used in contemporary cognitive science, awareness and consciousness are characterised in cybernetic terms as the dynamics of self-organising, autopoietic systems and their interactions. Sociocybernetic models of conscious systems are presented and discussed. It is argued that in order to characterise human consciousness it is necessary to make a distinction between bio-mechanical systemic unities and psychosocial systemic unities. Reflexively, this gives rise to a second-order cybernetics in which the observer explains herself to herself. Finally, there is a discussion of how sociocybernetic understandings of consciousness can give guidance for how to create and sustain communities in which good will prevails.

Keywords: consciousness, awareness, sociocybernetics

Introduction

“Everything is in interaction and reciprocal”, Alexander von Humboldt (1769 – 1859). Cited in Wulf, The Invention of Nature (2015, p. 59).

The aim of this paper is to show how sociocybernetics, using abstract concepts from cybernetics, can usefully combine biological, psychological and sociological concepts to provide conceptual clarification and insightful understandings of human consciousness. The cybernetic concepts that are central in my account include feedback, circular causality, self-organisation, adaptation, organisational closure, autopoiesis and variety management. I also draw on ideas, models and empirical work that I have discussed in a number of previous papers and weave them together to construct what I hope is a coherent narrative.

The motivation for writing this paper is to add to our understanding of the human condition. As a species, we live in ‘an age of unreason’: we are destroying the ecosystem that supports us (our home), we are killing and oppressing each other, population growth is out of control, and we hold pathological belief systems, not only in so-called ‘religious faiths’ but also in science, politics and economics.

The paper is structured as follows. There is a brief discussion and critique of how the term ‘consciousness’ is used and abused in contemporary neuroscience and cognitive science (philosophy of mind, cognitive psychology and artificial intelligence). As a preface to a cybernetic approach to this topic, there is a brief discussion of reflexive cosmogony and process metaphysics. This is followed by a cybernetic characterisation of awareness and consciousness. With these characterisations, we can then say that conscious systems are objects of study in sociocybernetics. Some sociocybernetic models of conscious systems are then presented and discussed. There is then a discussion of how these sociocybernetic understandings of consciousness can give ethical guidance for how to characterise, create and sustain “healthy” communities in which good will prevails. Finally, there are some concluding comments.

Use of the term ‘consciousness’

In cognitive science (which includes cognitive neuroscience, artificial intelligence and philosophy of mind), “consciousness” is frequently treated as a kind of “essence” found in subjective experience. Explaining how this essence arises – or may arise – in natural and artificial systems is referred to as the ‘hard problem’. This approach may be seen as dualistic, in that an ontological distinction is made between the world of experience (mind, subjectivity) and the world of matter (brain and body), and also as interactionist, in that a search is made in the latter for that which gives rise to the former. In contrast, in cybernetics and its precursors in American pragmatist philosophy (for example, in William James’ Principles of Psychology, first published in 1890), it is assumed that for all subjective experience there is a material (brain, body) correlate, captured in the aphorism, “A thought in the head is like a fist in the hand.”

I propose that the term “consciousness” be used, as it is by the cyberneticians Warren McCulloch (1965), Heinz von Foerster (2003), Gordon Pask (1975, 1986) and Richard Jung (2007), to refer to “knowing with” (L. con-scire), where the knowing can be with another or with oneself. This usage distinguishes consciousness as a primarily human phenomenon from the more general phenomenon of “awareness” observed in living systems.

Pask, in several early papers and in his 1975 book, Conversation, Cognition and Learning, presents a cybernetic account of awareness. Awareness is characterised cybernetically as the dynamics of a self-organising, autopoietic (pace, ‘organisationally closed’) system. Such systems are active ‘eaters of variety’. They seek novelty by exploring, and thus enlarging, their environmental niches. They actively adapt to environmental perturbations in an ongoing process of variety (uncertainty) generation by exploration and variety (uncertainty) reduction by anticipation. This process can be found in all living systems. According to Pask, it finds its highest expression in humans, who exhibit “a need to learn” (Pask, 1968, p. 1). This thesis is the cornerstone of Pask’s work on adaptive teaching machines, in which the machines aim to optimise the rate of learning in human learners by presenting problems of increasing difficulty as learning takes place, in such a way as to avoid boredom or overload.
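Pask's principle of adjusting difficulty so that a learner stays between boredom and overload can be illustrated with a minimal sketch. It is not a reconstruction of Pask's teaching machines: the simulated learner (`SimulatedLearner`, whose chance of success falls as difficulty exceeds skill and whose skill grows with practice), the target band of success rates and the step size are all hypothetical assumptions chosen for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class SimulatedLearner:
    """Hypothetical learner: success becomes less likely as difficulty exceeds skill."""
    skill: float = 1.0
    learning_rate: float = 0.05

    def attempt(self, difficulty: float, rng: random.Random) -> bool:
        p_success = 1.0 / (1.0 + 2.0 ** (difficulty - self.skill))
        success = rng.random() < p_success
        # Practice improves skill, a little more after a success than after a failure.
        self.skill += self.learning_rate * (1.0 if success else 0.5)
        return success


def adaptive_session(learner, trials=200, window=10, low=0.6, high=0.85,
                     step=0.25, seed=0):
    """Adjust difficulty after every `window` trials: raise it when the success
    rate exceeds `high` (risk of boredom), lower it when the rate falls below
    `low` (risk of overload). Returns the difficulty in force after each trial."""
    rng = random.Random(seed)
    difficulty, recent, history = 0.0, [], []
    for _ in range(trials):
        recent.append(learner.attempt(difficulty, rng))
        if len(recent) == window:
            rate = sum(recent) / window
            if rate > high:
                difficulty += step
            elif rate < low:
                difficulty = max(0.0, difficulty - step)
            recent.clear()
        history.append(difficulty)
    return history


learner = SimulatedLearner()
history = adaptive_session(learner)
print(f"final difficulty {history[-1]:.2f}, final skill {learner.skill:.2f}")
```

Raising difficulty when the learner succeeds too easily generates variety; lowering it when the learner fails too often keeps uncertainty manageable. The band between `low` and `high` is a design choice for the sketch, not a value taken from Pask.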

In summary, an observer may distinguish all living systems as showing awareness. She may attribute consciousness to those with whom awareness can be shared as a ‘knowing with’ in a conversational interaction.

Reflexive cosmogony and process metaphysics

“Life is ineluctable and ineffable” (Gordon Pask); “There are undecideables” (Heinz von Foerster); “The world .. is constructed in order to know itself .. Whatever it sees is only partially itself.” (George Spencer-Brown).

I begin this section with the above quotations to emphasise that we humans are a mystery that is part of a mystery. We are faced with undecidable questions such as: How did the world begin (cosmogony)? Is there a purpose to it all? What is life? How does the body work? Are there transcendentals? What happened before the big bang? As von Foerster emphasises, as human beings our ultimate freedom resides in how we choose to answer these undecidable questions. Our answers about our world take the form of stories we tell ourselves, cosmogonies. Insofar as these stories address questions about who, what and why we are, they are reflexive cosmogonies. Where should our stories begin? Answering this question takes us into the realm of metaphysics. Here are some examples of metaphysical starting points.

Pleroma (formless stuff) and Creatura (the world of distinctions) (Carl Jung, 1916, also cited by Gregory Bateson, 1972); Void (full emptiness) and Not-Void (empty fullness) from Hindu philosophy; Indefiniteness and Form (Richard Jung, 2007); the void and the form of distinction (George Spencer-Brown, 1969). Essentially, these distinctions are saying the same thing: there is the world of the undifferentiated, indescribable all; there is the world of distinction and description constructed by observers. Alfred Korzybski (1933) refers to the former as ‘the territory’ and the latter as ‘the map’ in his famous aphorism, “The map is not the territory.”

From classical times, a distinction has been made between cosmogonies that emphasise what the world is made of (its being) as ultimate, unchanging essence or substance and those that emphasise the processes of change (the world’s becoming) as the only constant. Cybernetic theories are oriented towards process, towards how things behave, and look for explanation not in what those things are made of but in how they are organised. Aristotle, often cited as the ‘father of biology’ as well as the ‘father of logic’, has also been claimed (by Gregory Bateson amongst others) as the ‘father of cybernetics’. To anticipate the discussion of explanation in cybernetics, the reader may find it helpful to recall Aristotle’s doctrine of the four ‘causes’ necessary to have knowledge of the world around us. In brief, the four causes are ‘material cause’ (what a thing is made of), ‘efficient cause’ (what had to happen to bring the thing about), ‘formal cause’ (the form or idea of a thing) and ‘final cause’ (the purpose to which a thing is put).

Eilhard von Domarus, in his (1967) thesis “The Logical Structure of Mind”, offers a variant on Aristotle’s schema. He takes the concept of ‘an occasion of experience’ from the ‘organic realist’ process philosophy of Alfred North Whitehead and applies it phenomenologically to the experience of an observer. For von Domarus, such an occasion of experience has four aspects: ‘passage’ in time, ‘extension’ in space, ‘idea’ (the forms distinguished by the observer) and ‘intention’ (the purpose of the observer).

In similar spirit, Richard Jung, in his (2007) book Experience and Action, develops a cybernetic phenomenology in which he distinguishes four explanatory metaphors: two for things that move or behave (ens movens), ‘organisms’ (which respond to stimuli) and ‘machines’ (which perform); and two for things that show purpose (ens volens), ‘mind’ (intentions to act) and ‘templates’ (a ‘semantic plexus’, rules for conduct).

The significance of these schemata for cybernetics is that they make clear the richness of phenomena that the study of purposive systems must take into account, whether building purpose (anticipation, goal seeking, goal maintenance, adaptation) into mechanical systems or explaining and modelling purpose in biological, psychological and social systems.

Cybernetic Modelling and Explanation

Ashby (1956, p. 2) states that cybernetics is the study of “all possible machines”. Ashby uses ‘machine’ as a synonym for ‘system’, where a system is that which persists. In Ashby’s view, the abstract concepts and principles of cybernetics can be used to model any ontological category of system. Cybernetic models feature ‘circular causality’: circuits in which signals about the outcomes of processes are fed back so as to control those processes. Cybernetic models are explanatory. They aim to show how a system is organised. They require interpretation as part of a narrative. Thus a theory is a model together with its interpretation.

In similar spirit, Gordon Pask (1975a, p. 13) states “Cybernetics is the science or art of manipulating defensible metaphors; showing how they can be constructed and what can be inferred as a result of their existence.”
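Circular causality can be made concrete with a minimal sketch of a negative feedback loop, assuming a hypothetical heated room: the outcome of the process (the temperature) is measured, its discrepancy from a goal is fed back, and the correction alters the process. The function `regulate` and its gain, heat-loss and ambient-temperature parameters are illustrative assumptions, not drawn from Ashby.

```python
def regulate(goal, initial, gain=0.5, leak=0.1, ambient=10.0, steps=50):
    """Simulate a negative feedback loop: a heater corrects the deviation of a
    room's temperature from `goal` while heat leaks towards `ambient`.
    Returns the temperature after each step, starting with `initial`."""
    temperature = initial
    trace = [temperature]
    for _ in range(steps):
        error = goal - temperature           # signal about the outcome, fed back
        heating = max(0.0, gain * error)     # the heater can warm but not cool
        temperature += heating - leak * (temperature - ambient)
        trace.append(temperature)
    return trace


trace = regulate(goal=20.0, initial=10.0)
print(round(trace[-1], 2))  # 18.33
```

Because this controller corrects only in proportion to the current error, it settles at the equilibrium T* = (gain · goal + leak · ambient) / (gain + leak), slightly below the goal: about 18.33 for a goal of 20 with the default values. The goal is supplied from outside the loop, as in what Pask calls a taciturn system.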

Our interest here is in modelling living systems, which cybernetics categorises as self-organising and autopoietic, that is, self-creating (Maturana and Varela, 1980). I like von Foerster’s succinct definitions: “Autopoiesis is that organization which computes its own organization”; “Autopoietic systems are thermodynamically open but organisationally closed.” (von Foerster, 2003, p. 281).

Apropos of our interest in conscious systems, those with which the observer may converse, it is useful to note Pask’s (1969) distinction between taciturn systems and language oriented systems. Taciturn systems are distinguished and observed by an external observer who infers or builds in their goals. Language oriented systems are self-distinguishing and set their own goals. They are interacted with (conversed with) by a participant observer. Aspects of Pask’s distinction were later summed up by von Foerster in his (1974) distinction between a first order cybernetics (the cybernetics of observed systems) and a second order cybernetics (the cybernetics of observing systems, the observation of observation). Thus to attempt to model and understand conscious systems is to study language oriented systems and to engage in second order cybernetics.

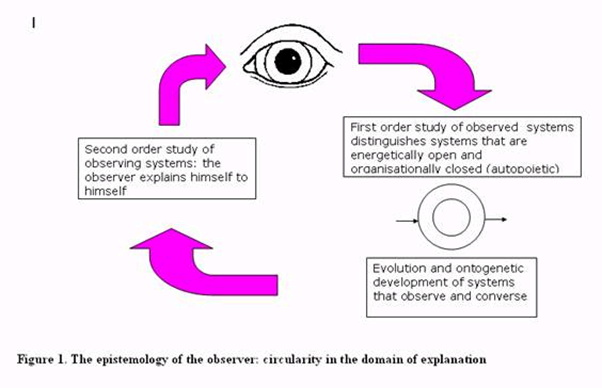

With second order cybernetics, the observer is explaining herself to herself in a never-ending hermeneutic narrative and conversational circularity, a spiral of storytelling, agreements, disagreements, understandings and misunderstandings (see figure 1). Here we see the limits of what can be modelled and what can be explained, as alluded to in our earlier discussion of metaphysics and undecidable questions. As Pask (1969) points out, these limits should not be taken as a reason for despair; rather, they show the open-ended and creative nature of our attempts to understand ourselves and the world we live in. We can hope for deeper and better understandings of what it is to be human.

Von Foerster insists that social cybernetics is a second order cybernetics: “Social cybernetics must be a second-order cybernetics – a cybernetics of cybernetics – in order that the observer who enters the system shall be allowed to stipulate his own purpose … If we fail to do so, we shall provide the excuses for those who want to transfer the responsibility for their own actions to somebody else.” (von Foerster, 2003, p. 286)

In contrast to positivist approaches to the social sciences (e.g., network science, complexity studies and other approaches in the systems sciences), I agree with a majority of the great sociologists (Max Weber, Emile Durkheim and Talcott Parsons, for example) that a distinct category of “the social” is required in the social sciences. This accords well with our earlier discussion of von Domarus’ metaphysical categories: passage, extension, idea and intention (von Domarus, 1967).

In the theorising that follows, I make an analytic distinction between organisationally closed bio-mechanical systemic unities, which exhibit passage and extension, and organisationally closed psychosocial systemic unities, which exhibit idea and intention. I have taken this distinction from Pask, who, in his cybernetic theory of conversations, refers to the former as Mechanical (M) Individuals and the latter as Psychological (P) Individuals. A P Individual (qua psychosocial unity) has the organisational form of a conversation and may be embodied in one or more M Individuals.

Sociocybernetic models of conscious systems

Cybernetic models help us understand how the brain functions as a complex command and control system. Classic studies include Ross Ashby’s (1948) work on ‘ultrastability’ (the brain’s ability to adapt to perturbations), Kilmer, McCulloch et al.’s (1969) work on the heterarchical organisation of the reticular formation and the brain’s ability to make virtually instantaneous decisions about what ‘mode’ to put the body in (fight, flee, eat, sleep and so on), Maturana’s understanding of the brain as an operationally closed neural network and von Foerster’s work on how the brain constructs a stable ‘reality’.
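Ashby's notion of ultrastability can be caricatured in a one-variable sketch. This assumes a hypothetical system rather than Ashby's homeostat: a main feedback loop x ← a·x is stable only when |a| < 1, and a second, step-function loop replaces the parameter a with a random value whenever the essential variable x leaves its limits. The function name `ultrastable_run` and all parameter values are illustrative.

```python
import random


def ultrastable_run(a0=1.5, x0=1.0, limit=5.0, settle=20, max_steps=1000, seed=0):
    """Hypothetical caricature of ultrastability.

    Main loop: x <- a * x, which is stable only if |a| < 1.
    Second loop (step-function): whenever the essential variable x leaves its
    limits (|x| > limit), a is replaced by a random value in [-2, 2].
    Returns (final a, number of parameter changes, whether x settled)."""
    rng = random.Random(seed)
    a, x = a0, x0
    changes, steps_within = 0, 0
    for _ in range(max_steps):
        x = a * x
        if abs(x) > limit:
            a = rng.uniform(-2.0, 2.0)  # random step to a new parameter value
            changes += 1
            steps_within = 0
        else:
            steps_within += 1
            if steps_within >= settle:  # x has stayed within limits long enough
                return a, changes, True
    return a, changes, False


a, changes, settled = ultrastable_run()
print(f"a={a:.3f}, changes={changes}, settled={settled}")
```

The system is not designed to find a stable parameter; it keeps changing at random until it happens on one, after which the essential variable stays within its limits and the step-function falls silent.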

I class as ‘sociocybernetic’ those models that address human psychological processes and human interaction.

Figure 2 is an attempt to show the complex dynamic processes that occur as a human learns. The brain/body system is a bio-mechanical unity (M Individual) that actively seeks and processes variety. As it adapts and habituates to the stimuli captured by the sensory systems, it seeks more variety. A number of bodily processes guide and affect the system. In figure 2, these are labelled: kinaesthesia, with subsystems the proprioceptive and vestibular systems; interoception (sensing of the body’s internal state); algedonic (pain, pleasure) feedback; endocrine and immune systems. There is also feedback through the environment. Motor responses affect sensory inputs, which inform the learner about where she is and what is happening around her. Familiar settings call forth learned responses. Unfamiliar settings induce learning and adaptation that reduces uncertainty. The figure shows the parts of the system where there is awareness and the learner is conscious with herself of what is happening. As an embodied psychosocial unity (P Individual), the learner may set her own goals and direct her own attention.

Figure 2. The dynamics of learning and awareness
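The loop of habituation and variety seeking described above can be caricatured in a few lines. This is a hypothetical sketch, not a model of any particular sensory system: the habituation rule, the recovery rate and the policy of attending to the least-habituated stimulus are all simplifying assumptions.

```python
def explore(stimuli, steps=30, gain=0.5, recovery=0.9):
    """Hypothetical variety-seeking agent. Each exposure habituates the agent to
    the chosen stimulus, habituation to every stimulus fades over time, and the
    agent attends to whichever stimulus it is least habituated to."""
    habituation = {s: 0.0 for s in stimuli}
    visits = []
    for _ in range(steps):
        choice = min(stimuli, key=lambda s: habituation[s])  # most novel stimulus
        visits.append(choice)
        for s in stimuli:
            habituation[s] *= recovery  # habituation fades
        habituation[choice] += gain * (1.0 - habituation[choice])  # habituate to choice
    return visits


print(explore(["light", "sound", "touch"], steps=6))
# ['light', 'sound', 'touch', 'light', 'sound', 'touch']
```

With the default parameters and three stimuli, the first six choices cycle through all three in turn: each exposure makes the chosen stimulus the most habituated one while the others recover.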

As a graduate researcher, under the supervision of Gordon Pask, I carried out a series of studies of how learners acquire keyboard skills. Learners followed different regimes. In control groups, learners followed conventional drill and practice methods. In experimental groups, learners were taught using adaptive teaching machines that presented stimuli, indicating which keys to press, at rates adapted to the learner’s degree of success. As part of these studies, in 1975, I constructed a computer program model that gave an account of how learning takes place. As an aid to exposition, the model had several versions of increasing complexity, the most complex being the “Full Typist” model. Here I give a brief description of how the model works. For more about the Typist models and the experimental studies on which they were based, see Scott and Bansal (2013, 2014).

The model simulates the acquisition of the skill of touch typing. It explains why proficient touch typists (1) lose access to a conscious knowledge of the skill structure and (2) are frequently aware that an error has been made, prior to the receipt of feedback.

The learner is modelled as a dynamical self-organising system in which achievement of goals is subject to a “free energy” economy. Stimuli (keyboard characters) are presented as a series of discrete events, and the learner has a limited time in which to respond to each. Feedback is provided about whether or not the response was successful.

Learning is simulated as an evolutionary process: successful ‘operators’, which decide which finger to move in which direction, are selected from a population of possible responses. If energy is available, complex operators, which combine a particular move with a particular finger, may be constructed from simple operators. There is an advantage in doing this, as applying a complex operator takes less energy than applying its simple operators separately. A ‘map’ of the keyboard is constructed so that inference rules (logical operators) can be used to reduce the set of possible responses. The interaction of operators applied concurrently is simulated by a set of serial executions of the operators that exhausts the set of possible interactions. With proficiency, conscious knowledge of the keyboard ‘map’ is lost.
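The selection and chunking steps just described can be sketched as follows. This is a hypothetical simplification, not the Full Typist model: it keeps only weighted selection of successful finger-and-move pairings under an energy budget and the construction of cheaper complex operators, and it omits the keyboard map, the inference rules, response time limits and the simulation of concurrent operators. All costs, thresholds and names are assumptions.

```python
import random

# Hypothetical parameters, chosen for illustration only.
SIMPLE_COST = 1.0    # energy to apply one simple operator (a finger OR a move)
COMPLEX_COST = 1.2   # energy to apply one complex operator (finger AND move)
BUILD_COST = 3.0     # energy needed to construct a complex operator
INCOME = 2.5         # free energy replenished on each trial
FINGERS = ["index", "middle", "ring"]
MOVES = ["up", "home", "down"]


def train(keyboard, trials=300, seed=0):
    """Simulate skill acquisition for `keyboard`, a dict mapping each character
    to its correct (finger, move) pair. Returns the complex operators built and
    the energy cost of responding on each trial."""
    rng = random.Random(seed)
    # Every (finger, move) pairing starts as an equally likely candidate response.
    weights = {c: {(f, m): 1.0 for f in FINGERS for m in MOVES} for c in keyboard}
    complex_ops = {}
    energy, costs = 0.0, []
    for _ in range(trials):
        char = rng.choice(sorted(keyboard))
        if char in complex_ops:
            # A complex operator responds directly and more cheaply.
            response, cost = complex_ops[char], COMPLEX_COST
        else:
            # Simple operators: choose a pairing in proportion to its weight.
            options = list(weights[char])
            response = rng.choices(options, weights=[weights[char][o] for o in options])[0]
            cost = 2 * SIMPLE_COST
        energy += INCOME - cost
        costs.append(cost)
        if char in complex_ops:
            continue
        if response == keyboard[char]:
            weights[char][response] += 1.0  # selection favours successful operators
            if weights[char][response] >= 5.0 and energy >= BUILD_COST:
                complex_ops[char] = response  # combine finger and move into one operator
                energy -= BUILD_COST
        else:
            weights[char][response] *= 0.5  # unsuccessful operators are weakened
    return complex_ops, costs


keyboard = {"f": ("index", "home"), "r": ("index", "up"), "d": ("middle", "home")}
complex_ops, costs = train(keyboard)
print(complex_ops, sum(costs[-50:]) / 50)
```

Because a complex operator is built only after a correct response has been reinforced, every complex operator the sketch constructs maps its character to the correct key, and each use of it costs less than applying the two simple operators.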

Proficient performance is characterised by the state of affairs where “which finger with which move” operators are immediately available and applied and where the keyboard map serves only as an internal template or description of the desired goal that non-consciously confirms or disconfirms what was done. A disconfirmation in the model simulates the situation where the proficient typist becomes aware of making an error: his/her daydream is interrupted and he/she is called upon to attend consciously to the task at hand. The theoretical justification for the form of the simulation is that the cognition of the typist is seen as a unitary organisation in which particular processes go on concurrently, autonomously and unconsciously so long as they do not conflict. When there is conflict there is uncertainty; the learner becomes aware that something requires her attention. The uncertainty is reduced when the learner decides how to resolve the conflict.
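The role of the internal template in this account can be sketched as a non-conscious check that runs alongside proficient performance. The names `perform`, `procedure` and `template` are hypothetical: `procedure` stands for the proceduralised skill (which may contain slips) and `template` for the internalised keyboard map that only confirms or disconfirms what was done. Resolving a conflict by deferring to the template is a further simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Event:
    char: str        # character to be typed
    pressed: str     # key produced by the proceduralised skill
    attended: bool   # True if a conflict with the template called for attention
    final: str       # key after any conscious correction


def perform(text, procedure, template):
    """Hypothetical proficient typist: fast execution plus a background check."""
    events = []
    for char in text:
        pressed = procedure.get(char, "?")   # fast, non-conscious execution
        conflict = pressed != template[char]  # non-conscious check against the goal
        # Only a conflict engages attention; resolving it reduces the uncertainty.
        final = template[char] if conflict else pressed
        events.append(Event(char, pressed, conflict, final))
    return events


template = {c: c for c in "the cat"}
procedure = dict(template, a="s")  # a slip: 'a' is produced as 's'
events = perform("the cat", procedure, template)
print([e.char for e in events if e.attended])  # ['a']
```

Attention is engaged only on the keystroke where procedure and template conflict, which is how the sketch represents a typist noticing an error before any external feedback arrives.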

Conversation Theory

The Typist model can be generalised for domains other than touch typing as follows.

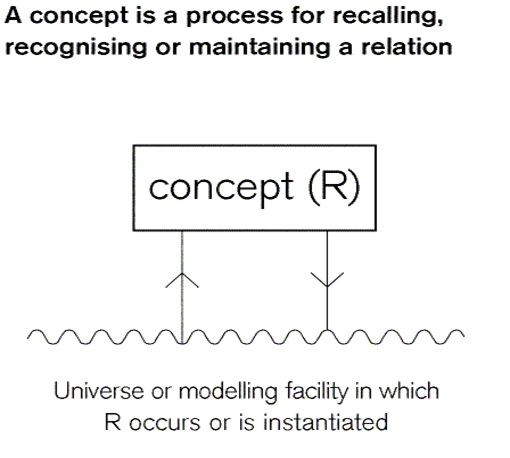

In the model, operators are created and evolve that bring about finger movements and key pressing. In general, there are cognitive operators or processes that bring about or maintain a relation in a (given) universe of interpretation. A useful general name for such processes is ‘concepts’. (See figure 3.) Also in the model there are operators (processes) that create and maintain the processes that bring about finger movement and key pressing. In general, there are cognitive operators that bring about or maintain concepts: ‘concepts of concepts’. A useful general name for such processes is ‘memories’. In the Full Typist model, the overall process of acquiring and performing the skill has a cyclic form: knowing leads to doing, which leads to further knowing and further doing. The process is a whole that reproduces itself in the context of the domain of touch typing.

Figure 3. A concept is a cognitive process that brings about, recalls, recognises or maintains a relation, R.

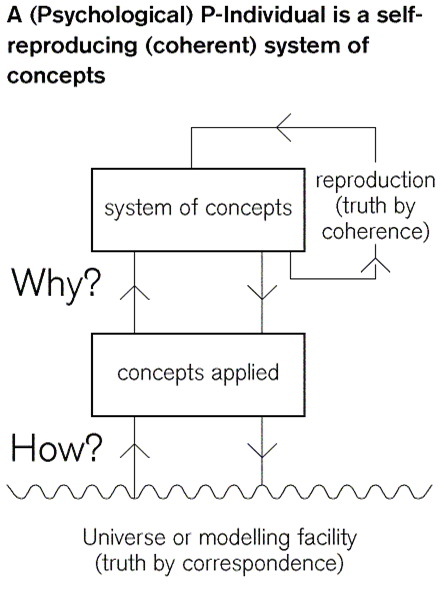

A stable (organisationally closed) system of concepts and memories is what Pask refers to as a P-Individual (Psychological Individual). (See figure 4.) The terminology is due to Pask as used in conversation theory (Pask, Scott and Kallikourdis 1973, Pask 1975). Conversation theory had its beginnings in studies of skill-learning; its scope was much enlarged by studies of the learning of academic subject matter (Pask and Scott 1972, 1973). Scott (1993) provides an historical account of the development of conversation theory. Scott (2009) provides a summary of conversation theory’s key concepts.

Figure 4. A P-Individual

The Typist model explains key aspects of human cognition: how consciously constructed knowledge becomes proceduralised, and how conflict in concurrently executed processes may engender conscious awareness of error and uncertainty. The explanations are necessarily second-order: they explain the observer to herself. As constructor of the model and narrator of the theory that gives it significance, I am aware, in conversation with myself, that in writing this article I have been engaged in learning and the acquisition and performance of skills. Suitably generalised, the theory provides an account of its own genesis. One’s intention to solve a problem and one’s understanding of relevant principles serve as constraints to which evolving concepts must fit. The construction of a satisfactory new concept may happen within a few milliseconds or may require deep thought and gestation over a period of days, weeks or a lifetime.

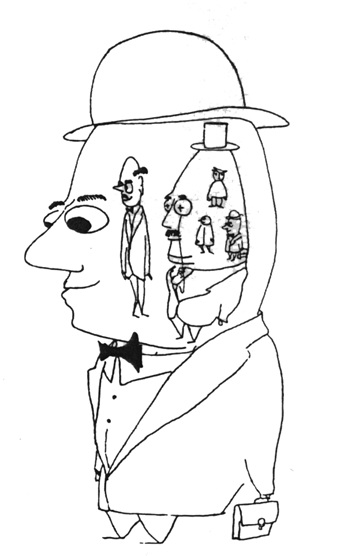

Pask’s general term for the dynamics of the cognitive processes of constructing and reconstructing concepts that occur in conversations with oneself or with others is ‘conceptualisation’. Conceptualisation is conserved (one cannot not conceptualise). This is the ongoing process of thinking, imagining and problem solving. Concepts may be refined as new distinctions are made (for example, dogs are distinguished as different breeds). Concepts may be generalised as distinctions are voided (for example, dogs are seen as members of the class, animals). Concepts are applied in particular contexts of action and interaction (as examples: cycling, doing algebra, playing chess). Pask refers to these as ‘conversational domains’. The domains are related by analogies which map similarities and differences (for example, chess has similarities with draughts and other games). We conceptualise selves and others (see figure 5).

Figure 5. Conceptualising self and others (original drawing by Gordon Pask)
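Refinement and generalisation of concepts can be illustrated with a deliberately crude sketch in which a concept is represented extensionally, by the set of distinctions an instance must satisfy. This is an assumption made for illustration only: in conversation theory a concept is a process that brings about or maintains a relation, not a static set of features. The `Concept` class and the feature names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Concept:
    """Hypothetical extensional caricature: a concept is the set of
    distinctions an instance must satisfy."""
    name: str
    distinctions: frozenset

    def applies_to(self, instance: set) -> bool:
        return self.distinctions <= instance

    def refine(self, name: str, distinction: str) -> "Concept":
        # Making a new distinction narrows the concept.
        return Concept(name, self.distinctions | {distinction})

    def generalise(self, name: str, distinction: str) -> "Concept":
        # Voiding a distinction broadens the concept.
        return Concept(name, self.distinctions - {distinction})


dog = Concept("dog", frozenset({"animal", "canine"}))
terrier = dog.refine("terrier", "terrier")
animal = dog.generalise("animal", "canine")

rex = {"animal", "canine", "terrier"}
tom = {"animal", "feline"}
print(terrier.applies_to(rex), dog.applies_to(tom), animal.applies_to(tom))  # True False True
```

Making a distinction narrows the range of instances a concept applies to; voiding one broadens it.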

In conversations, the participants conceptualise each other and each other’s perspectives of the others’ perspectives in the dance of reciprocity. Participants ‘provoke’ (Pask’s term) each other to answer questions, explain matters and demonstrate procedures. They teach their understandings back to each other. They agree and may agree to disagree. In so doing the conversation itself takes the form of a P-Individual, a psychosocial unity.

Creating and maintaining healthy communities

“Speech has enabled … us … to conquer every square inch of land, subjugate every creature … and the creation of an internal self …” Tom Wolfe, The Kingdom of Speech (2016), p. 165.

“With our growing self-consciousness and increasing intelligence we must begin to control tradition and assume a critical attitude toward it, if human relations are ever to change for the better” Albert Einstein (1946).

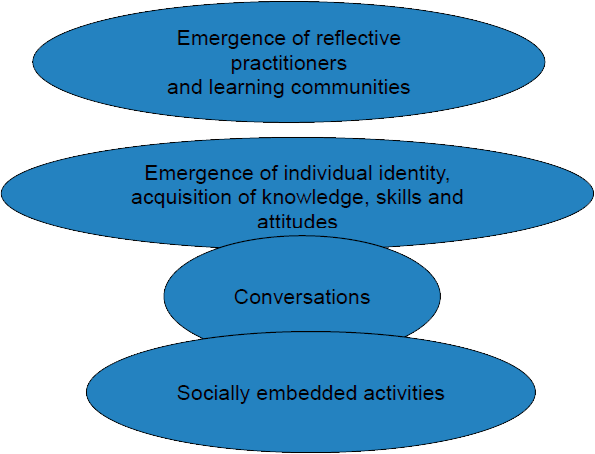

Thus far I have said little about how consciousness arises and how selves are formed in child development, nor have I discussed the central role of language (or ‘languaging’, to use Maturana’s preferred term) in these processes. I have dealt with these topics at some length in other papers (Scott, 2007a, 2011c), drawing on classical studies by Jean Piaget, Lev Vygotsky, George Herbert Mead, John and Elizabeth Newson, Humberto Maturana and others. Rather than revisit these topics in detail, I sum up my thinking in figure 6. There I include my concern with how, if we understand the processes in question, we may cultivate communities and societies that exhibit the best practices of creative and harmonious living and what I have referred to elsewhere as ‘cybernetic enlightenment’, beginning with socially embedded activities (working, playing, learning, teaching and child rearing) and the conversations that arise in them.

Figure 6. Learning, as communities, to do things better.

Sociocybernetic understandings of consciousness can give ethical guidance for how to characterise, create and sustain ‘healthy’ communities in which good will prevails. They also make it possible to characterise pathologies of consciousness, pathological belief systems and pathological communities and to find effective ways of healing them. If we make progress in these matters, we may one day attain the ideal of a ‘truly human society’ as described by Humberto Maturana.

“A truly human society is …. a non-hierarchical society for which all relations of order are constitutively transitory and circumstantial to the creation of relations that continuously negate the institutionalization of human abuse” (Maturana and Varela, 1980, Introduction, Point 15).

As noted earlier, the business of exploring and understanding the human condition is a creative, open-ended process.

References

Ashby, W.R. (1952). Design for a Brain, Chapman & Hall, London.

Ashby, W.R. (1956). An Introduction to Cybernetics. Wiley, New York.

Avery, J.S. (2016). Information Theory and Evolution. World Scientific, Singapore.

Bateson, G. (1972). “Form, substance and difference”, in Steps to an Ecology of Mind. Intertext Books, London, pp. 454-471.

Einstein, A. (1946). “The Negro Question”. Pageant Magazine, January issue. Available at https://onbeing.org/blog/albert-einsteins-essay-on-racial-bias-in-1946/#.V4KDRQCSh9k.facebook (accessed 20/09/2017).

George, F. (1961). The Brain as a Computer. Pergamon Press, Oxford.

Hacker, P.M.S. (2012). “The sad and sorry history of consciousness: being among other things a challenge to the ‘consciousness studies community’”. Royal Institute of Philosophy, Supplementary Volume 70. Available at http://info.sjc.ox.ac.uk/scr/hacker/docs/ConsciousnessAChallenge.pdf (accessed 15/09/2017).

Jung, C.G. (1916). Septem Sermones ad Mortuos, translated by H. G. Baynes. Reprinted 1967 by Stuart and Watkins, London. The original text is available here: http://gnosis.org/library/7Sermons.htm; accessed 21/07/2017.

Jung, R. (2007). Experience and Action. edition echoraum, Vienna.

Kilmer, W., McCulloch, W., & Blum, J. (1969). “A model of the vertebrate central command system”. International Journal of Man–Machine Studies, 1, 279–309.

Korzybski, A. (1933). Science and Sanity, 1st ed. International Non-Aristotelian Library.

Maturana, H. (1969). “Neurophysiology of cognition”. In: Cognition: A Multiple View, Garvin P. L. (ed.). Spartan Books, New York, 3-24.

Maturana, H. (1995). “Biology of self-consciousness”, in Consciousness: Distinction and Reflection. G. Tratteur (ed). Napoli: Bibliopolis, pp. 145-175.

Maturana, H. R. & Varela, F. J. (1980). Autopoiesis and Cognition. D. Reidel, Dordrecht, Holland.

McCulloch, W.S. (1965). Embodiments of Mind. MIT Press, Cambridge, MA.

Mead, G. H. (1934). Mind, Self and Society. (C. W. Morris, ed.). University of Chicago Press, Chicago.

Newson, J. & Newson, E. (1979). “Intersubjectivity and the transmission of culture”. In: Oates, J. (ed.) Early Cognitive Development. Croom Helm, London, pp. 281–286.

Pask, G. (1968). “Man as a system that needs to learn”. In Stewart, D. (ed.), Automaton Theory and Learning Systems. Academic Press, London, pp. 137-208.

Pask, G. (1969). “The meaning of cybernetics in the behavioural sciences”, in Rose, J. (ed.), Progress of Cybernetics, Vol. 1, Gordon and Breach, London, pp. 15-45.

Pask, G. (1975a). The Cybernetics of Human Learning and Performance. Hutchinson, London.

Pask, G. (1975b). Conversation, Cognition and Learning. Elsevier, Amsterdam.

Pask, G. (1981). “Organisational closure of potentially conscious systems”. In: Zeleny, M. (ed.), Autopoiesis. Elsevier, Amsterdam, pp. 265-307.

Pask, G. (2011). The Cybernetics of Self-Organisation, Learning and Evolution. Papers 1960-1972. Selected and Introduced by Bernard Scott. edition echoraum, Vienna.

Pask, G. and Scott, B. (1972). "Learning strategies and individual competence", Int. J. Man-Machine Studies, 4, pp. 217-253.


Pask, G., Scott, B. and Kallikourdis, D. (1973). "A theory of conversations and individuals (exemplified by the learning process on CASTE)", Int. J. Man-Machine Studies, 5, pp. 443-566.

Piaget, J. (1952). The Language and Thought of the Child, Routledge and Kegan Paul, London.

Piaget, J. (1980). Principles of Genetic Epistemology, Routledge, London.

Prigogine, I. (1980). From Being to Becoming, Freeman, San Francisco, CA.

Schroedinger, E. (1944), What is Life?, Cambridge University Press, Cambridge.

Scott, B. (1987). "Human systems, communication and educational psychology", Educ. Psychol. in Practice, 3, 2, pp. 4-15, chapter 10 in Scott (2011a).

Scott, B. (1993). “Working with Gordon: developing and applying Conversation Theory (1968-1978)”, Systems Research, 10, 3, pp. 167-182.

Scott, B. (2002). “A design for the recursive construction of learning communities”, Int. Rev. Sociology, 12, 2, pp. 257-268, chapter 22 in Scott (2011a).

Scott, B. (2007a). “The co-emergence of parts and wholes in psychological individuation”, Constructivist Foundations, 2, 2/3, pp. 65-71, chapter 28 in Scott (2011a).

Scott, B. (2007b). “Facilitating organisational change: some sociocybernetic principles”, J. of Organisational Transformation and Organisational Change, 4, 1, pp. 3-14, chapter 27 in Scott (2011a)

Scott, B. (2009). “Conversation, individuals and concepts: some key concepts in Gordon Pask’s interaction of actors and conversation theories”, Constructivist Foundations, 4, 3, pp.151-158.

Scott, B. (2010). “The global conversation and the socio-biology of awareness and consciousness”, J. of Sociocybernetics, 7, 2, pp. 21-33, chapter 34 in Scott (2011a).

Scott, B. (2011a). Explorations in Second-Order Cybernetics: Reflections on Cybernetics, Psychology and Education. edition echoraum, Vienna.

Scott, B. (2011b). “Education for cybernetic enlightenment”. Cybernetics and Human Knowing, 21(1-2), pp. 199–205.

Scott, B. (2011c). “Cognition and language”, chapter 9 in Scott (2011a).

Scott, B. (2015). “Minds in chains: a sociocybernetic analysis of the Abrahamic faiths”. J. of Sociocybernetics, 13, 1. https://papiro.unizar.es/ojs/index.php/rc51-jos/article/view/983

Scott B. (2016). “Cybernetic foundations for Psychology”. Constructivist Foundations 11(3): 509–517. Available at http://constructivist.info/11/3/509.

Scott, B. and Bansal, A. (2013), “A cybernetic computational model for learning and skill acquisition”, Constructivist Foundations, 9, 1: 125-136. Available at http://constructivist.info/9/1/125

Scott, B. and Bansal, A. (2014). “Learning about learning: a cybernetic model of skill acquisition”. Kybernetes, 43, 9/10, pp. 1399-1411.

Shapere, D. (1977), “Scientific theories and their domains”, in Suppe, F. (Ed.), The Structure of Scientific Theories, (2nd ed.), University of Illinois Press, Urbana, IL.

Spencer-Brown, G. (1969). Laws of Form. London: Allen and Unwin.

Von Domarus, E. (1967). “The logical structure of mind (with an introduction by W. S. McCulloch)”. In L. O. Thayer (Ed.), Communication: Theory and Research. Springfield, IL: Chas. C. Thomas. https://ntrs.nasa.gov/archive/nasa/casi.ntrs.nasa.gov/19650017787.pdf

Von Foerster, H. (1960). “On self-organizing systems and their environments”. In Yovits M. & Cameron S. (eds.), Self-Organizing Systems. Pergamon Press, London: 31-50.

Von Foerster, H. (2003) Understanding Understanding: Essays on Cybernetics and Cognition. Springer, New York.

Von Foerster, H. et al (eds.) (1974). Cybernetics of Cybernetics. BCL Report 73.38, Biological Computer Laboratory, Dept. of Electrical Engineering, University of Illinois, Urbana, Illinois.

Vygotsky, L.S. (1962). Thought and Language. M.I.T. Press, Cambridge, Mass.

Whitehead, A.N. (1929). Process and Reality, The Free Press, New York, NY.

Winch, P. (1958), The Idea of a Social Science, Routledge & Kegan Paul, London.

Wolfe, T. (2016). The Kingdom of Speech. Little, Brown & Co., London.

Wohlleben, P. (2017). The Hidden Life of Trees. William Collins, London.

Wulf, A. (2015). The Invention of Nature. John Murray, London.

It may be helpful to note that Aristotle referred to living creatures as being ‘empsucho’, ‘ensouled’ by that which organises and animates them (Greek, psuche, in English, soul or ‘psyche’). The ‘souls’ of plants empower growth and reproduction; the ‘souls’ of animals in addition empower sensation and movement; the ‘souls’ of humans add to this the power to reason.

In recent years, with the help of Abhinav Bansal, the Typist models have been reconstructed. The several versions of the models can be accessed online at http://23.21.163.252/typist/

----------------------------------------------------------------------------

Sociocybernetic Reflections on the Human Condition